|

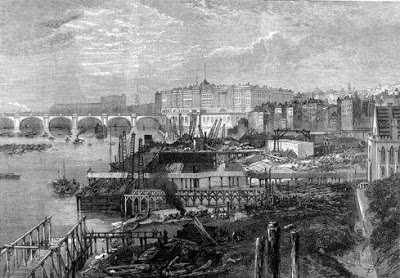

| Sewers under construction, north bank of the Thames looking west. Image from End of More. |

The end of more:

Can we ‘downsize’ and survive?

We continue to delude ourselves that ‘downsizing’ will somehow allow us to carry on with our current lifestyle with perhaps only minor inconveniences.

By Norman Pagett and Josephine Smit / The End of More / April 25, 2013

“Healthy citizens are the greatest asset any country can have.” — Winston Churchill

LONDON — Faced with inevitable decline in our access to hydrocarbon resources, we read of numerous ways in which we will have to downsize, use less, work less, grow our own food, use goods and services close to home, consume only what we can manufacture within our own personal environment, or within walking distance.

If we are to survive, we must “live local” because the means to exist in any other context is likely to become very difficult. There is rarely, if ever, any mention of the healthcare we currently enjoy, which has given us a reasonably fit and healthy 80-year average lifespan.

There seems to be a strange expectation that we will remain as healthy as we are now, or become even healthier still through a less stressful lifestyle of bucolic bliss, tending our vegetable gardens and chicken coops, irrespective of any other problems we face.

And while “downsizing” — a somewhat bizarre concept in itself — might affect every other aspect of our lives, it will not apply to doctors, medical staff, hospitals and the vast power-hungry pharmaceutical factories and supply chains that give them round the clock backup.

Nor does downsizing appear to apply to the other emergency services we can call on if our home is on fire or those of criminal intent wish to relieve us of what is rightfully ours. Alternative lifestylers seem to have blanked out the detail that fire engines, ambulances and police cars need fuel, and the people who man them need to get paid, fed, and moved around quickly.

In other words “we” can reduce our imprint on the environment, as long as those who support our way of life do not. Humanity, at least our “Western” developed segment of it, is enjoying a phase of good health and longevity that is an anomaly in historical terms. There is a refusal to recognize that our health and well-being will only last as long as we have cheap hydrocarbon energy available to support it.

Only 150 years ago average life expectancy was around 40 years and medical care was primitive, basic, and dangerous. Children had only a 50/50 chance of reaching their fifth birthday. Death was accepted as unfortunate and inevitable, but big families ultimately allowed survival of a few offspring to maturity, which gave some insurance against the inevitable privations of old age.

The causes of disease, many of which we know to be the result of the filth and chaos of crowded living, contaminated water, and sewage, were merely guessed at. The overpowering smell of this waste was generally accepted as a cause of a great deal of otherwise unexplained sickness.

Even the ancient Romans built their sewers to contain the smells they considered dangerous; getting rid of sewage was a bonus. Malaria literally meant “bad air,” and the name of the disease has stayed with us even though we now know its true cause.

Prevailing winds

As cities developed, particularly in Europe, the more prosperous quarters were, and still are, built in the south and west, to take advantage of the general prevailing winds blowing the smells of the city eastwards. Thus the east side of many cities had to endure the industrialization that created the prosperity of the western suburbs.

In many respects the populations of European cities of the eighteenth and nineteenth centuries reflected the problems of our own times: they were growing faster than any means could be found to sustain them. Cities were seen as sources of wealth and prosperity, so people crowded together in them, but in so doing they created the seedbeds for the diseases that were making the cities ultimately untenable.

To quote from Samuel Pepys’ Diary:

This morning one came to me to advise with me where to make me a window into my cellar in lieu of one that Sir W Batten had stopped up; and going down into my cellar to look, I put my foot into a great heap of turds, by which I find that Mr Turner’s house of office is full and comes into my cellar, which doth trouble me. October 20th 1660; …

People were being debilitated and killed by the toxicity of their own wastes and that of the animals used for muscle power and food. By 1810 the million inhabitants of London (by then the biggest city in the world) used 200,000 cesspits; their contents could only be cleared out manually and so were usually neglected. Waste simply accumulated because no authority took final responsibility for doing anything about it, and any laws on the matter were widely flouted.

By the 1840s, water closets were coming into general use in more affluent homes through the availability of pumped water. While these were seen as an improvement on the chamber pots of previous eras, the water closets resulted in greater quantities of water flowing into the cesspits.

This water in turn overflowed into street drains that had only been created to take rainwater into ditches and tributaries of the River Thames. Improvements in personal hygiene, allowing the upper classes to “flush and forget,” had unwittingly created an even bigger danger to public health for everyone else.

Cities and towns were expanding under the pressure of industrialization, but by continuing to use a pre-industrial infrastructure of waste disposal they were being constantly hit by outbreaks of diseases that swept through huddled tenements and luxury homes alike.

Draw-off points for public drinking water were often carelessly close to sewage discharges, or the water came from town wells that were contaminated by overflowing cesspits. Cholera and typhoid fever became the scourge of Victorian London.

The Thames as it ran through the city became an open sewer, as tidal flows washed effluent back and forth twice a day. It was a problem that grew throughout the early part of the nineteenth century, culminating in the unusually hot summer of 1858 when bacteria thriving in the fetid water created what became known as the “great stink.”

Even the business of government itself was overcome, and plans were made to evacuate parliament to Oxford or St Albans, such was the overpowering stench of the river. Even curtains soaked in chloride of lime could not counteract the smell of raw sewage coming up from the Thames outside, but at least it focused minds and money on the problem.

Numerous proposals were made to deal with it, but only Joseph Bazalgette, chief engineer of the London Metropolitan Board of Works, came up with a workable solution. This was a truly stupendous undertaking that involved building 82 miles of intercepting sewers on the north and south banks of the Thames serving 450 miles of main sewers, linking to 13,000 miles of minor street drains. The completed system could deal with a daily waste output of half a million gallons of sewage.

The sewers were designed to take the raw effluent out to the coast to the north and south of London by gravity, terminating in giant pumping stations driven by Cornish beam engines each needing 5,000 tons of coal a year to keep them running. They lifted the sewage into giant reservoirs that discharged it out to sea on ebb tides. No attempt was made to treat the sewage, merely to get rid of it.

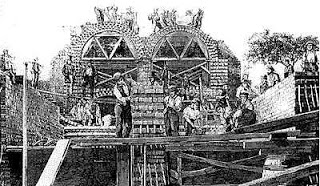

To build those sewers required 315 million bricks, and almost a million tons of mortar and cement. You can’t make bricks and mortar without heat, and lots of it. The only source of heat on that scale was coal, which could only be got in quantity by deep mining. With the heat energy from coal, Victorian engineers could manufacture top quality bricks by the million in enormous new kilns, rather than on the relatively small scale previously allowed by using wood as a heat source.

|

| London embankment sewer brickwork under construction. Image from End of More. |

A marvel of Victorian engineering

The entire scheme was completed between 1856 and 1870 and was a marvel of Victorian engineering, but it was only made feasible by fossil fuel energy. Coal from deep mines had only become widely available in the late 1700s, when the invention of the viable steam engine allowed miners to pump out flood water from deep shafts (the same type of steam engines that pumped the sewage to the sea).

Bazalgette’s enterprise was the biggest undertaking of civil works in the world at that time, and from firing the bricks to discharging waste into the open sea it depended entirely on the availability of cheap energy from coal. Even the delivery of the bricks and materials into the heart of the city could only have been done by the recently constructed steam-powered railways.

The sewer system is out of sight and largely out of mind but remains a stark example of how we need continual energy inputs at the most basic level to sustain our health. The same sewers still keep London healthy today, and they discharge a hundred times the volume anticipated by Bazalgette’s original design.

It was ironic that burning cheap coal would save thousands of lives in the capital city by providing the means to build its sewers, while simultaneously causing thousands of deaths over the following century by poisoning its air until the introduction of the Clean Air Act in 1956.

Every developed town and city across the world now safeguards the health of its citizens in the same way, by pumping away wastes to a safe distance before treatment. But to do it there must be constant availability of hydrocarbon energy. Electricity will enable you to pump water and sewage but it cannot provide all the infrastructure needed to build or maintain a fresh water or waste treatment plant; for that you need oil, coal, and gas.

Modern domestic plumbing systems are now made largely of plastic, which is manufactured exclusively from oil feedstock, while concrete main sewer pipes are produced using processes that are equally energy intensive. The safe discharge of human waste and the input of fresh water have been critical to health and prosperity across the developed world, yet we continue to delude ourselves that “downsizing” will somehow allow us to carry on with our current lifestyle with perhaps only minor inconveniences.

But we are even more deluded when it comes to the medical profession and all of the advanced treatments and technologies it can provide to keep us in good health for ever longer lifespans and make our lives as pain-free as possible. We have a blind faith that we can continue to benefit from a highly complex, energy-intensive healthcare system, irrespective of what happens to our energy supplies.

We read of the conditions endured by our not-so-distant forebears, and recoil in horror at the prevalence of the dirt and diseases they had to accept as part of their lives. We should perhaps stop to consider that they did not have the means to make it otherwise. In the absence of any real medical help, people who could afford them carried a pomander, a small container of scented herbs held to the nose as some kind of protection against disease and the worst of the city odours.

We think of ourselves as somehow different, but our modern health system will survive only as long as the modern day pomander of our hydrocarbon shield is there to protect it.

The last century saw massive advances in healthcare, driven by both fossil fuel and world war. The new technology and energy sources available at the start of the First World War allowed killing on an industrial scale but it also drove innovation and industrialization of medical care. The war saw the development of the triage system of prioritizing treatment for the wounded, and new means of transporting patients away from the dangers of the battlefield quickly.

In 1914 Marie Curie adapted her X-ray equipment into mobile units, specifically designed to be used in battlefield conditions. At the same time, disease was being contained with the help of mobile laboratories, tetanus antitoxin, and vaccination against typhoid. All this was no defence against the virus of the so-called Spanish flu, which broke out and spread among troops and civilians alike, killing more people than the previous four years of conflict in a pandemic that ran from 1918 till 1920.

The war had killed 37 million people, and estimates put the total number of fatalities of the flu epidemic at up to another 50 million, but even those enormous numbers show as barely a blip when we look back on the inexorable rise in population in the last century.

Laying a foundation for modern medical care

The skills that had been employed to create the sewage disposal and fresh water pumping works of the nineteenth century now provided the foundations for making medical care and childbirth cleaner and safer in the twentieth.

But every innovation demanded energy input. Even the production of chlorine based bleach, which kills the bacteria of tetanus, cholera, typhus, carbuncle, hepatitis, enterovirus, streptococcus, and staphylococcus, and which we now take for granted, would not have been possible without the industrial backup to manufacture and distribute it.

Incorrectly handled, chlorine will kill almost anything, including us. Progress in healthcare might have appeared slow to those involved, but in historical terms it began to move rapidly. Fossil fuel energy provided a cleaner environment for humanity to breed, and we began to make up the numbers lost between 1914 and 1920.

While human ingenuity was critical to such rapid progress, none of it would have been possible without the driving force of oil, coal, and gas. Our collective health today still hangs by that thread of hydrocarbon.

As the industrial power of nations forced technology ahead at an ever increasing pace after World War One, the underlying energy driving our factory production systems increased general prosperity, and that in turn financed research into unknown areas of disease.

Alexander Fleming, professor of bacteriology at St Mary’s Hospital in London, first identified Penicillium mould in a petri dish in his laboratory in 1928, and began to recognize its potential for preventing post-surgical wound infections. But its full potential was not brought into play until World War Two, just over a decade later.

The drug had been created on the laboratory bench, but it needed the power of energy-driven industry to make it available in quantity. Constraints in Britain’s wartime manufacturing capacity meant that production had to be carried out in the U.S., and even there it proved difficult to refine the process to produce penicillin on an industrial scale.

John L Smith, who was to become president and chairman of Pfizer and who worked on the deep-tank fermentation process that provided a successful solution to large scale production, said of penicillin:

The mold is as temperamental as an opera singer, the yields are low, the isolation is difficult, the extraction is murder, the purification invites disaster, and the assay is unsatisfactory.

Even with the power of American industry behind it, penicillin only became available for limited use on war wounds by 1944/5, and was not made available for general use until after the war.

For little more than a century developments in safe drinking water supply, sanitation, and medical science have allowed us progressively to tackle many once-fatal diseases and illnesses. We minimized the risk of infection and created vaccines, cures, or life-prolonging treatments for everything from measles to cancers.

Western affluence and medical technologies support lives that would not otherwise be viable, for those who are born prematurely or who suffer serious injury, disability or illness. Medical treatment now incorporates preventative measures to extend lives and keep people in “perfect” health for as long as possible. As a result, average life expectancy across the global population has grown from just under 50 years in the 1950s to 67 years today.

So-called “miracle” drugs gave man a sense of omnipotence that tipped into hubris when, in 1969, U.S. Surgeon General William Stewart was reported to have said it was time to “close the book on infectious disease.”

Fighting a losing battle

But we have not closed that book, nor are we likely to. Sir Alexander Fleming forecast that bacteria killed by his new wonder drug would eventually develop resistance to it. Within decades the effectiveness of antibiotics in tackling Staphylococcus aureus bacteria was diminishing and methicillin-resistant Staphylococcus aureus, the MRSA “superbug,” was taking hold.

It is easy to forget that before the development of the antibiotic the medical profession could provide no effective cure for infections such as pneumonia, and a slight scratch from a rose thorn could be enough to cause death from blood poisoning.

We are fighting a losing battle against nature; bacteria will always win the war of numbers. No matter what medication we add to our arsenal, bacteria will always mutate to resist it. Since the emergence of MRSA, hospitals have had to deal with constantly mutating new strains, each one more virulent than the last, testing our ingenuity in dealing with them, and killing patients we thought could be protected from such infection.

In some regions of the world the malaria parasite is becoming resistant to the anti-malarial drug artemisinin, while drug-resistant tuberculosis has been reported in 77 countries, according to research by the U.S. Centers for Disease Control and Prevention.

In our arrogance we have failed to take account of nature’s resilience, and have also neglected to consider human nature and our instinct to put self-interest above the common good, even if contagion is spread in the process. The behavior of the human race is less easily controlled than bacteria in a petri dish.

In less developed parts of the world, notably Africa, HIV/Aids and other infectious diseases continue to claim nearly 10 million lives a year. Global political directives and programmes to prevent and tackle disease are commonly falling short of their objectives for a variety of reasons, including localised corruption, lack of financial support from the wealthy West and misinformation propagated through local superstition or by religious groups.

Tending to the rich

In spite of the good intentions of global leaders, there continues to be a huge disparity between the health risks and care of rich and poor within cities, nations, and regions of the world. The U.S. has more than a third of the world’s health workers, tending the diseases of the affluent: heart disease, stroke, and cancer.

Many of the consuming world’s ills are being caused by people’s excesses, eating too much of the wrong foods, drinking too much alcohol, smoking, or sunbathing. A billion of the world’s people are overweight, a figure that is balanced in the cruelest of ironies by the billion who cannot find enough to eat.

At the same time, the poor of the world often lack access to medical facilities, doctors, and drugs, and also to the basics of safe drinking water, sanitation, and waste disposal. It is estimated that almost half of the developing world’s population live without sanitation, and as increasing numbers of people are living in overcrowded, urban conditions the potential for transmission of infectious disease grows.

The consuming nations had the geological good fortune to be sitting on resources — coal and iron — that could be used to build water and waste disposal systems, but others have been far less fortunate. We now see megacities like Lagos and others with populations of 10 million or more with little or no water or sewage infrastructure, in tropical heat.

For them, the energy to build a modern health infrastructure is a dream that will never materialize: there is too little energy left and it has all become too expensive.

It is also becoming too expensive for the consuming countries of the West, as can be seen in the government cuts in health service budgets now taking place. We have developed extremely successful and innovative medical technologies, a pill for every ill, and a physical infrastructure of surgeries, clinics and hospital buildings: all are highly sophisticated luxuries that consume vast amounts of energy and that we can no longer afford.

The U.S. Environmental Protection Agency estimates that hospitals use twice as much energy per square foot as a comparable office block, to keep the lights, heating, ventilation, and air conditioning on 24/7 and run an array of equipment from refrigerators to MRI scanners.

But don’t take our word for it. Dan Bednarz, PhD, health-care consultant and editor of the Health after Oil blog, presented his view of the future at a nurses’ conference in Pennsylvania, USA:

Fossil fuel costs will continue to rise and eventually the healthcare system will be forced to downsize — just as the baby boomers and (possibly) climate change effects inundate the system.

Without energy input our hospitals and medical systems cannot be maintained at their present levels, and concepts of health and care become very different.

We are already seeing a resurgence of alternative medical therapies, often using herbs similar to those in the historic pomander. This foreshadows what will happen in your post-industrial future as well-fed health and wellbeing give way to weakness and disease, accentuated by poor nutrition, and the energy-driven skills of modern medicine are no longer readily available.

A doctor might have a knowledge of what ails you, but that might be almost his only advantage over his medieval counterpart. Knowing that you need an antibiotic to stop a raging infection will be of little use if there’s no means of getting hold of it.

Just contemplate the “innovative” methods of the surgeons in northern Italy’s medieval universities in the 1400s:

“They washed the wound with wine, scrupulously removing every foreign particle; then they brought the edges together, not allowing wine nor anything to remain within — dry adhesive surfaces were their desire. Nature, they said, produces the means of union in a viscous exudation, or natural balm as it was afterwards called by Paracelsus, Pare, and Wurtz. In older wounds they did their best to obtain union by desiccation, and refreshing of the edges. Upon the outer surface they laid only lint steeped in wine.” — Sir Clifford Allbutt, regius professor of physic, University of Cambridge

The modern health system has replaced our need to take responsibility for our own bodies. It cannot give us immortality, but it has given us the next best thing: long, safe, and comfortable lives. We built our good health on hydrocarbon energy, but in the future a wealth of factors will make it progressively more difficult for us to exert control over disease as that energy source slips from our grasp.

Disease will become more prevalent, not only in localized outbreaks, but at epidemic and even pandemic levels. Your healthcare system cannot downsize: it’s either there or it isn’t.

[Norman Pagett is a UK-based professional technical writer and communicator, working in the engineering, building, transport, environmental, health, and food industries. Josephine Smit is a UK-based journalist specializing in architecture and environmental issues and policy who has freelanced for British newspapers including the Sunday Times. Together they edit and write The End of More.]